
Artificial Intelligence (AI) algorithms have a growing influence over our society. It is not unusual to see this technology used by banks to decide who is entitled to receive a loan, by human resources departments to select the best candidate for a job, and by health insurance providers to determine an individual’s premium, among other examples. These uses have the power to boost efficiency and promote corporate goals, yet they raise a myriad of legal and ethical risks. Properly addressing these risks is not only a requirement of international laws and regulations but is also in the best interest of service companies seeking a competitive advantage.

Simply trying to eliminate bias isn’t enough to avoid the potential pitfalls. An algorithm must actively identify legal and ethical risks that may result from its application and act as a mitigating agent in these situations. It must allow for scrutiny by diverse social groups and qualified professionals, such as engineers and computer scientists, ethicists, social scientists, workflow and operations managers, consultants, and attorneys. Implementing such practices may face hurdles, but in return it offers varied benefits, including to the organization’s moral and legal standing, its reputation among consumers, and its appeal to potential employees.

The growing use of AI technology is already an established trend. The services giant PricewaterhouseCoopers (PwC) expects global GDP to be 14% higher in 2030 as a result of AI. Moreover, according to Workana, a platform that connects freelancers with companies in Latin America, the search for data scientists grew 7,300% in 2020, a demand that may increase in 2021.

Yet at the same time, there is no universally agreed-upon definition of responsible or ethical AI. A report by The Economist Intelligence Unit (EIU), titled “Staying ahead of the curve: The business case for responsible AI,” gathered multidimensional perspectives on the benefits that implementing responsible technologies and AI systems brings to businesses, and highlighted the potential commercial advantage for those who adopt responsible AI early. It lists the key values in responsible AI design and application as: (1) transparency and explainability; (2) justice, fairness and non-discrimination; (3) doing social good and the promotion of human values; (4) avoiding harm; (5) freedom, autonomy and human control of technology; (6) responsibility; (7) accountability; and (8) privacy.

Value-added: Techlash, Compliance, Privacy

The development of responsible AI, which follows the aforementioned principles, is beneficial not only from an ethical and moral point of view, but is also a source of medium- to long-term commercial advantage for organizations. Distinguishing itself as a company that promotes ethical practices can, for example, give an organization a much-needed leg up in recruiting technical professionals and retaining top talent, especially in times of increased demand for qualified developers.

According to the EIU report, ethically questionable practices are discouraging prospective employees from applying for jobs and opportunities, and are even prompting them to lose faith in the sector, contributing to the so-called “techlash,” a term that describes public animosity and distrust toward large technology companies. This suggests that pioneers in the application of responsible AI will have an advantage in attracting sought-after employees and an easier time keeping their recruits.

Ethical and responsible AI application also offers advantages when it comes to preserving and expanding one’s client base. It is highly relevant for companies to develop inclusive products and services, which perform more effectively across all user profiles, ensure safety and are transparent, as these features have the power to retain customers and increase the organization’s credibility.

Developing responsible AI is also important from a compliance perspective. More and more authorities are increasing their monitoring of AI applications and introducing regulations that incorporate standards and ethical considerations, such as algorithmic impact assessments and auditing processes. Another example of these demands is the principle of privacy by design, which is already included in several privacy and data protection frameworks around the world.

In summary, integrating ethical and privacy-related concerns in applications of AI technology is a substantial challenge. Nevertheless, it is very much aligned with innovative trends in the global market and is becoming increasingly necessary in light of new regulations. Thus, organizations that choose to pursue responsible AI development and application stand to benefit on a variety of levels and to promote, rather than compromise, their commercial objectives.

————————————————————————————————————————————————–

The Israel Public Policy Institute (IPPI) serves as a platform for the exchange of ideas, knowledge and research among policy experts, researchers, and scholars. The opinions expressed in the publications on the IPPI website are solely those of the authors and do not necessarily reflect the views of IPPI.

Share this Post

What is the role of trust in Germany's AI Strategy?

In our everyday use of the term, we describe trust as a feeling or an emotion. For some,…

Does Foreign Electoral Interference Violate International Law?

Interference in democratic decision-making processes carried out by outside powers is anything but a novel phenomenon. Especially during…

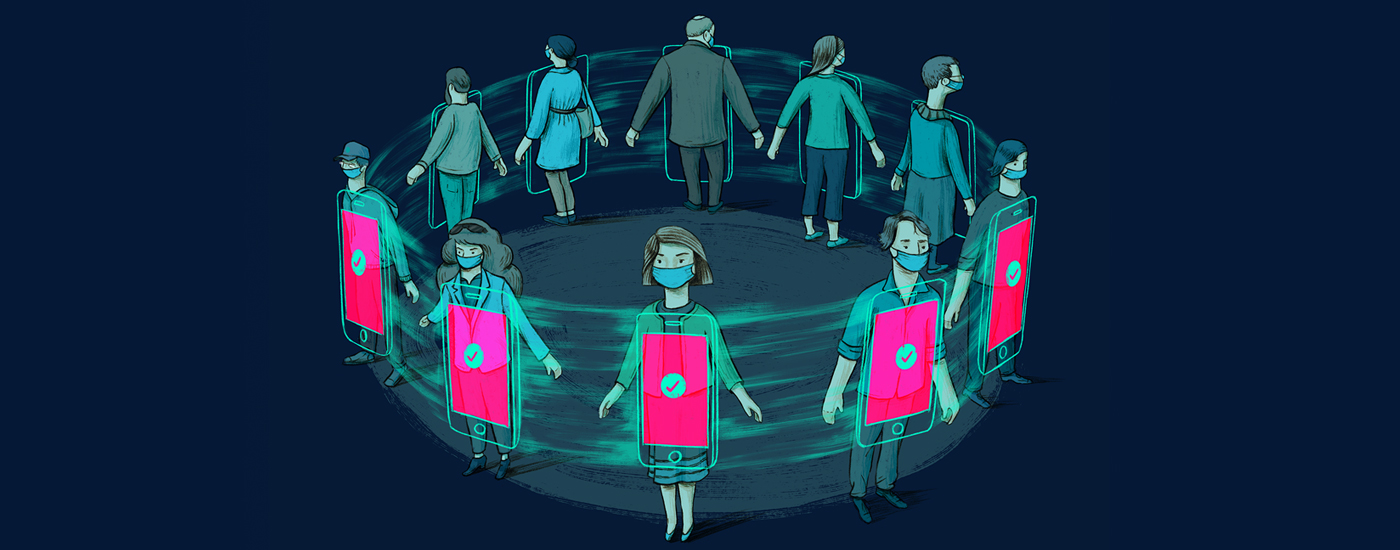

The German Corona-App: Expectations, Debates and Results

The German Corona warning app is a success story. This paper tries – within the confines of its…