The Weakest Link: Why We Cannot Look at Our Information Environment Platform-by-Platform


Over the past year, as the gravity of the Covid-19 pandemic dawned on governments and people around the world, social media platforms pledged to curb the dissemination of misinformation that could undermine efforts to fight the pandemic. More than a year into the pandemic, and despite multiple policy changes and steps taken by the main social media platforms, it is clear that the information environment in many countries around the globe (democracies in particular) remains polluted and flooded with misinformation, making it harder for societies to overcome the pandemic. Recently, the U.S. Surgeon General, Dr. Vivek Murthy, dedicated his first formal Surgeon General Advisory to “Confronting Health Misinformation,” calling on social media platforms such as Facebook, YouTube and Twitter to do more to confront the challenge.

Social media platforms such as Facebook, Twitter, TikTok, Instagram and others, and messaging apps such as WhatsApp and Telegram, remain hubs of news and information consumption for the public in countries around the world. According to the Pew Research Center, in 2020 around 50% of Americans said they got their news often or sometimes from social media, mainly Facebook and YouTube.[1] According to the Reuters Institute at the University of Oxford, similar patterns can be seen in the United Kingdom, while in Germany around 40% of people consume news from social media. Globally, Facebook, YouTube and WhatsApp are perceived as the main social media platforms for news and information dissemination.[2] In Israel, news consumption through social media is even higher, at around 60%, according to a survey by Bezeq, one of Israel’s largest telecommunication companies. Yet only 20% of respondents consider the information on social media to be reliable.[3]

Since January 2020, major social media platforms around the globe have reported taking multiple steps to limit the spread of Covid-19-related misinformation. These steps have included deplatforming leading spreaders of misinformation, labeling misleading and false information, and changing recommendation algorithms for health-related content, among others. Yet, citing ongoing research and press coverage, policymakers around the world argue that the main social media platforms are falling short in the fight against health misinformation.

The Challenge: Cross-Platform Information Pollution

No Platform is an Island

Reliance on social media platforms for news consumption presents challenges in the struggle against disinformation. Pew Research Center surveys from 2020 suggest that Americans who rely mostly on these platforms (close to 20% of those surveyed) were less knowledgeable about politics and the pandemic.[4] The content these users consume is not generated by the platforms themselves (e.g., Facebook or YouTube); rather, it is produced by other users and parties and reaches them in the following ways:

- Content is posted or published on the platforms by people, pages and accounts the user follows.

- Relevant content is linked by friends, pages or accounts the user follows. If a user is subscribed to a YouTube channel, the platform then pushes similar content to the user.

- Content is recommended by the platform’s mechanisms. For example, when a user watches a video on YouTube, the site recommends other videos based on its algorithms. When a user follows an account on Instagram, the app often recommends similar accounts to follow. In both cases, as on other platforms, the recommendation mechanism is designed to surface content the user is most likely to favor.

Decontextualization of Credible Content

Having established how online platforms have become an essential conduit in our information environment, we face the challenge of understanding what information users consume through them and from which sources (original content, mainstream media content, digital media). The ability to attach multiple links, images, videos and posts and share them with the public also gives interested actors the opportunity to build false narratives while basing them on factual evidence and credible sources. In our interlinked communication system, networks of activists or state actors then try to amplify these narratives across the information environment. In other words, this environment is vulnerable to decontextualization.[5] The problem, however, is not only the decontextualization of false information; the environment also allows actors to create disinformation by manipulatively transplanting factually accurate content from one domain (not necessarily a platform) to another.

Given the hyperlinked nature of our information environment, the protective actions taken by social media companies will continue to fall short, since in most cases they focus on content and dissemination mechanisms on their own platforms, leaving the cross-platform domain vulnerable to abuse by malign actors. Throughout the pandemic we have seen numerous instances of this tactic, as malign actors used credible news reports, and even medical and scientific papers, to build false and sometimes dangerous narratives.

Case Study: Abusing News Reports by Cross-Platform Decontextualization

Phase I: From a Credible Local News Report to Anti-vax Conspiracy Online

During December 2020, media outlets around the world covered the initial rollout of the mRNA Covid-19 vaccines. In Chattanooga, Tennessee, local media covered the vaccination of staff at a local hospital. As one of the nurses came to speak with the press after receiving her first dose, she fainted in front of the cameras. The news segment, which was uploaded to YouTube, was quickly picked up by over a dozen anti-vax pages and accounts on Facebook and Instagram, and gained millions of views on YouTube, Instagram and Facebook within a couple of days.

Although a separate video segment shows the same nurse explaining later that day that an underlying condition had caused her weakness and that this had happened to her before, for anti-vax communities the incident, caught on camera, served as proof of the new vaccine’s hazards. For example, a day after the incident, rapper Lil Duval shared the video segment with his 1.2 million followers on Facebook with the hashtag #nocando. Nothing in his post was manipulated or incorrect, but its message was grossly misleading; the post remained online for months after the incident with no indication that the video had been taken out of context.

Traditional media coverage (TV newscasts and news websites) reported the incident along with its context: the cause of the fainting and the broader vaccination operation, in which thousands of people were vaccinated that day. By contrast, the current information environment allows users (and influencers) to spread the isolated segment without any of that context. By decontextualizing the footage, anti-vaxxers offered an alternative set of facts about what had happened that day in Chattanooga while avoiding any false statements. They took a segment from a credible local news outlet (via YouTube) and added their own interpretation, fitting the event to the anti-vax narrative. The activists did not have to falsify footage or even make libelous claims. All they had to do was copy the link to the video segment from YouTube to another platform and write a short text raising questions about the vaccine. These trivial steps were not imaginable, let alone possible, three decades ago. The hyperlinked information environment exposes us to many more voices, but it also allows actors with varying motives to create an abundance of narratives with different shades of truth.

Phase II: Not Just Social Media – (Ab)Using Multiple Platforms and Services

The story took another twist several days later, when anti-vax and anti-science accounts began disseminating content claiming not only that the nurse had become seriously ill after the incident, but that she had subsequently died, and that the authorities were trying to prevent the story from being exposed. To support this claim, they shared “information” from Ancestry.com and searchquarry.com supposedly proving that the nurse had passed away. When the media contacted the websites, it emerged that the information was false and had probably been uploaded intentionally as a hoax.

Several days after the posts and videos that created the narrative went viral, the platforms’ moderation efforts did flag some of them and even took some down. Others, however, were still online six months later, and the conspiracy theory lives on despite these interventions. Moreover, some of the videos taken down from YouTube were replaced by copies uploaded to other video platforms (such as BitChute and OurTube), allowing anti-vax activists and conspiracy theorists to share the content again on other platforms through links. More than six months after the incident, these posts, tweets and videos remain online with no disclaimers about their lack of credibility.

Case Study: Turning Science Upside Down

Access to and discovery of information is at the core of our online ecosystem. This includes broader access for researchers and the public to scientific studies through open-access journals, dedicated search engines and even preprint servers, which make studies available to the public before peer review. Since the beginning of 2020, numerous scientific studies, both before and after peer review, have been reported and shared by media outlets and through social media. Many of these reports spread widely, often linking to preliminary versions of studies uploaded to preprint servers such as medRxiv and bioRxiv. Given the urgency of responding to the pandemic, global interest in related research, even in its preliminary form (prior to peer review), is understandable. But the decontextualization of scientific studies at times even undermined scientific consensus.

Phase I: Abusing the Science Publication System

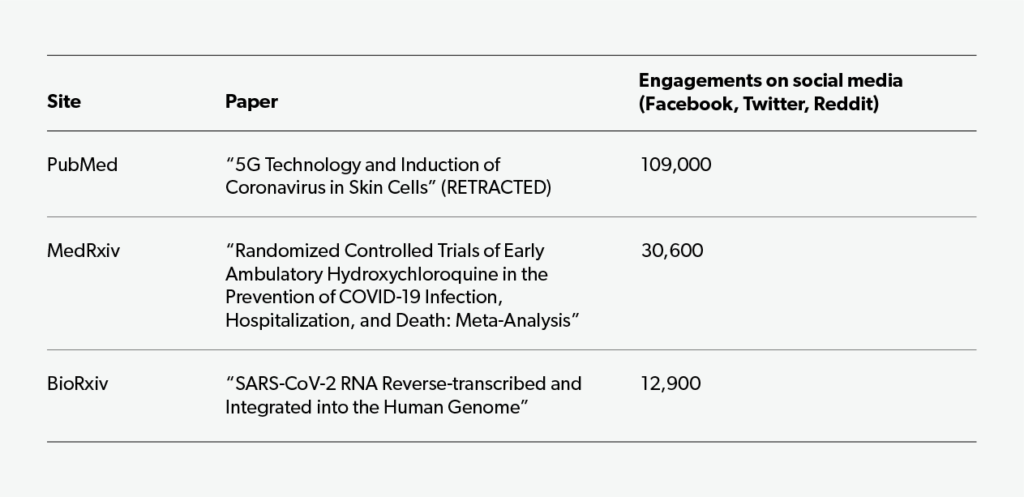

There are several major open-access websites containing Covid-19-related medical and scientific studies. Among them are (1) PubMed, the U.S. NIH’s search engine for studies catalogued in the National Library of Medicine; and (2) medRxiv and (3) bioRxiv, preprint servers for medical and biological studies respectively, which allow researchers to post manuscripts ahead of peer review. The table below shows the most accessed and shared URLs from these three sites from June 2020 to June 2021.

Cherry-picking scientific studies is not an invention of the internet era. However, the current information environment does not merely allow this phenomenon; it makes it easier than ever before. With unprecedented ease, users can pick the study they wish to use, copy and paste its URL, supply whatever new context they wish, and claim that they are “backed by science,” while ignoring any data that does not fit their point of view. Finally, they can disseminate it far and wide using social media platforms.

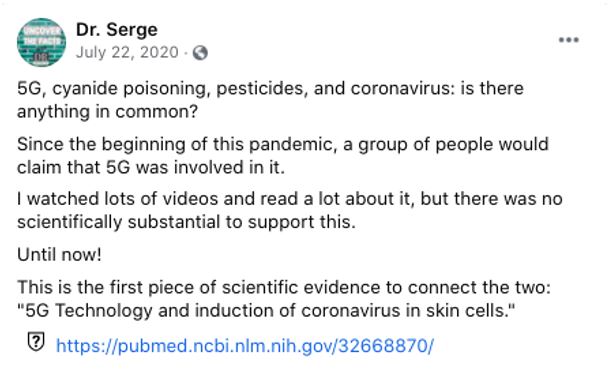

Posts that use PubMed links to spread disinformation will often add an “alternative context,” either ignoring the consensus on a topic or trying to “debunk” it, and provide a link to a single study to claim they are “backed by science.” In all three cases mentioned above, the papers were linked by social media accounts in an attempt to make false claims about Covid-19 and potential treatments. For example, the paper “5G Technology and Induction of Coronavirus in Skin Cells,” published in “The Journal of Biological Regulators & Homeostatic Agents,” was shared and liked on social media over 100,000 times over the past year in posts providing its PubMed link. According to an AFP report, the paper was retracted, yet it gave a boost to a wave of false claims about links between 5G technology and Covid-19. It was also cited on dozens of blogs seeking to amplify the false conspiracy theory around 5G technology, and some of the most popular posts attached the link as scientific evidence for this baseless argument. This is not a unique example. Over the past couple of years, multiple retracted papers related to mask wearing, alternative treatments, homeopathic remedies for Covid-19 and other issues were shared across social media in an attempt to persuade the public that most scientists and health authorities are wrong, or hiding the truth.

Phase II: Providing New Context, Ignoring Reality, and Leveraging Science Illiteracy

Picture by Adi Cohen / All Rights Reserved

Conclusions: Big Tech and More

The case studies presented above illuminate the challenge of tackling misinformation in our current information environment. Content shared on communication platforms is often produced elsewhere. Further, the architecture of the online domain allows us to curate different pieces of information (often factual) into a new framing of the facts and share it publicly online. In other words, we can not only “decontextualize” a piece of information but also re-contextualize it as we wish, and in so doing create a new version of the truth. The accessibility of content, and the relative ease with which it can be edited or doctored, have brought us to a point of “information pollution,” in which the public is flooded with content through social media and communication platforms. Because these platforms allow us, the users, to amplify our personal “version of truth,” we expect them to take more proactive measures to fight the spread of misinformation.

However, a significant part of the challenge lies in spaces outside the jurisdiction of “Big Tech” platforms. The case studies discussed above show that while the companies responsible for a large share of public communication online, such as Google, Facebook and Twitter, should take more proactive measures to fight the spread of misinformation, more attention should also be paid to other online repositories of information, such as preprint servers, genealogy and people-search websites, and alternative video platforms.

[1] Shearer, Elisa, and Amy Mitchell. “News Use Across Social Media Platforms in 2020.” Pew Research Center, January 12, 2021.

[2] Reuters Institute for the Study of Journalism. Digital News Report 2020. https://reutersinstitute.politics.ox.ac.uk/sites/default/files/2020-06/DNR_2020_FINAL.pdf

[3] Leizerovitch, Keren. Internet Report 2020 (in Hebrew). Bezeq, January 27, 2021. https://media.bezeq.co.il/pdf/internetreport_2020.pdf

[4] Mitchell, Amy, et al. “Americans Who Mainly Get Their News on Social Media Are Less Engaged, Less Knowledgeable.” Pew Research Center, July 30, 2020. https://www.journalism.org/2020/07/30/americans-who-mainly-get-their-news-on-social-media-are-less-engaged-less-knowledgeable/

[5] Krafft, P. M., and Joan Donovan. “Disinformation by Design: The Use of Evidence Collages and Platform Filtering in a Media Manipulation Campaign.” Political Communication 37, no. 2 (2020): 194–214. DOI: 10.1080/10584609.2019.1686094.

The opinions expressed in this text are solely those of the author(s) and do not necessarily reflect the views of IPPI and/or its partners.
